Search and Sample Return

This project is based on NASA's Sample Return Robot Challenge.

https://www.nasa.gov/directorates/spacetech/centennial_challenges/sample_return_robot/index.html

Udacity provided a simulator in which we built a perception pipeline and controlled a rover by sending commands over a SocketIO connection. Through this project, I worked through three major steps of robotics: perception, decision making, and action.

Perception

The perception pipeline took the simulated camera image from the rover as input and, through a series of operations, produced the final rover-centric coordinates.

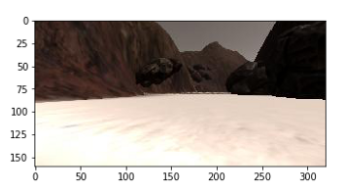

The first operation was a perspective transform, which converted the camera image to a top-down view.
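To illustrate what a perspective transform does, here is a minimal sketch that uses only NumPy. It estimates the 3x3 homography from four point correspondences and maps a single pixel through it. The source and destination points, and the names `homography_from_points` and `warp_point`, are hypothetical and chosen only for this example. A real pipeline would more likely call an image library routine to warp the whole frame.

```python
import numpy as np

def homography_from_points(src, dst):
    """Solve for the 3x3 homography H that maps each src point to its dst point.

    src and dst are sequences of four (x, y) pairs. H[2, 2] is fixed to 1,
    which leaves eight unknowns and eight linear equations.
    """
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.append(v)
    h = np.linalg.solve(np.array(rows, dtype=float), np.array(rhs, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)

def warp_point(H, point):
    """Map one (x, y) pixel through H and divide by the homogeneous scale."""
    x, y = point
    u, v, w = H @ np.array([x, y, 1.0])
    return u / w, v / w

# Hypothetical calibration: a ground-plane grid cell seen as a trapezoid in
# the camera image (src) and as a small square in the top-down view (dst).
src = [(14, 140), (301, 140), (200, 96), (118, 96)]
dst = [(155, 154), (165, 154), (165, 144), (155, 144)]
H = homography_from_points(src, dst)
```

Each of the four source corners lands on its destination corner, and every other pixel on the ground plane is mapped by the same matrix.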

Next, we applied a color threshold to get a single-channel binary image, separating navigable terrain from obstacles.
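A color threshold of this kind can be sketched in a few lines of NumPy. The function name `color_thresh` and the default cutoff of 160 per channel are assumptions for illustration, not values taken from this project. The idea is that bright pixels are treated as navigable ground and everything else as obstacle.

```python
import numpy as np

def color_thresh(img, rgb_thresh=(160, 160, 160)):
    """Return a binary mask for an RGB image of shape (height, width, 3).

    A pixel is 1 when all three channels exceed their threshold
    (assumed to mean bright, navigable ground) and 0 otherwise.
    """
    above = (
        (img[:, :, 0] > rgb_thresh[0])
        & (img[:, :, 1] > rgb_thresh[1])
        & (img[:, :, 2] > rgb_thresh[2])
    )
    return above.astype(np.uint8)
```

The cutoff values would need tuning against real simulator frames.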

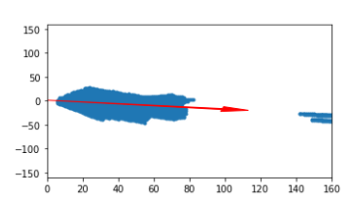

Last, we converted the (x, y) pixel positions to rover-centric coordinates. We then used the mean angle of those points (red arrow) to navigate and steer the robot.
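The conversion and the mean-angle steering can be sketched as follows. This assumes the rover sits at the bottom center of the top-down binary image, with x pointing forward and y pointing left. The function names and the 15 degree steering limit are assumptions for the example.

```python
import numpy as np

def rover_coords(binary_img):
    """Convert nonzero pixels to rover-centric (x forward, y left) coordinates."""
    ypos, xpos = binary_img.nonzero()
    # Image rows grow downward, so flip them to make "forward" positive x.
    x_pixel = -(ypos - binary_img.shape[0]).astype(float)
    # Image columns grow rightward, so flip them to make "left" positive y.
    y_pixel = -(xpos - binary_img.shape[1] / 2).astype(float)
    return x_pixel, y_pixel

def to_polar_coords(x_pixel, y_pixel):
    """Return the distance and angle (radians) of each point from the rover."""
    dist = np.sqrt(x_pixel ** 2 + y_pixel ** 2)
    angles = np.arctan2(y_pixel, x_pixel)
    return dist, angles

def steering_angle(binary_img, max_steer_deg=15.0):
    """Steer toward the mean angle of navigable pixels, clipped to a limit."""
    x_pixel, y_pixel = rover_coords(binary_img)
    if x_pixel.size == 0:
        return 0.0  # no navigable terrain in view, so hold the wheel straight
    _, angles = to_polar_coords(x_pixel, y_pixel)
    mean_deg = np.degrees(angles.mean())
    return float(np.clip(mean_deg, -max_steer_deg, max_steer_deg))
```

With this convention, a positive angle means open terrain lies to the left of the rover, and a negative angle means it lies to the right.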

The goal of this project was to control the rover, map at least 40% of the environment at 60% fidelity, and locate at least one of the rock samples. To locate rock samples, I applied a separate color threshold tuned specifically for the rocks. In the end, the rover mapped 90% of the environment and reached 73% fidelity.
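A rock-specific threshold could look like the sketch below. It assumes the rock samples appear yellowish (high red and green, low blue), and the bounds and the name `rock_thresh` are illustrative guesses, not the values used in this project.

```python
import numpy as np

def rock_thresh(img, rgb_low=(110, 110, 0), rgb_high=(255, 255, 50)):
    """Return a binary mask of pixels whose RGB values fall inside a color band.

    The default band is an assumed range for yellowish rock samples.
    """
    low = np.array(rgb_low)
    high = np.array(rgb_high)
    in_band = np.all((img >= low) & (img <= high), axis=2)
    return in_band.astype(np.uint8)
```

Any nonzero pixel in the resulting mask marks a candidate rock, which can then be converted to rover-centric coordinates in the same way as navigable terrain.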

Future improvements

There are still many improvements to make to this project, since there are so many things to try. The wall-following idea suggested by peers in the course is really smart and is definitely something I can try. I'm happy with what I've done, and I look forward to improving this and working on more new projects.